Welcome to Part 3 of our AI in FinTech series. In Part 1, we explored how AI is reshaping the future of financial innovation, and in Part 2, we examined real-world use cases bringing those innovations to life. Together, these insights show why AI is no longer optional in FinTech; it’s already redefining markets.

Now, as artificial intelligence (AI) becomes deeply embedded in the DNA of modern financial services, the challenge for founders, architects, and investors is no longer whether to adopt AI, but how to build and scale it responsibly. Integration, not experimentation, is now the focal point.

AI banking solutions can enhance financial access, automate compliance, reduce fraud, personalize experiences, and predict risks with exceptional speed and precision. But building AI-native FinTech platforms goes beyond stitching algorithms together; it requires intentional design across architecture, compliance, governance, explainability, and human collaboration.

Over the past two decades, I’ve witnessed dozens of AI implementations across lending, banking, insurance, and payments. While some exceeded expectations, others failed because of avoidable oversights, inadequate planning, or a lack of accountability. This post consolidates those lessons into an actionable guide for anyone serious about building the next generation of FinTech innovations.

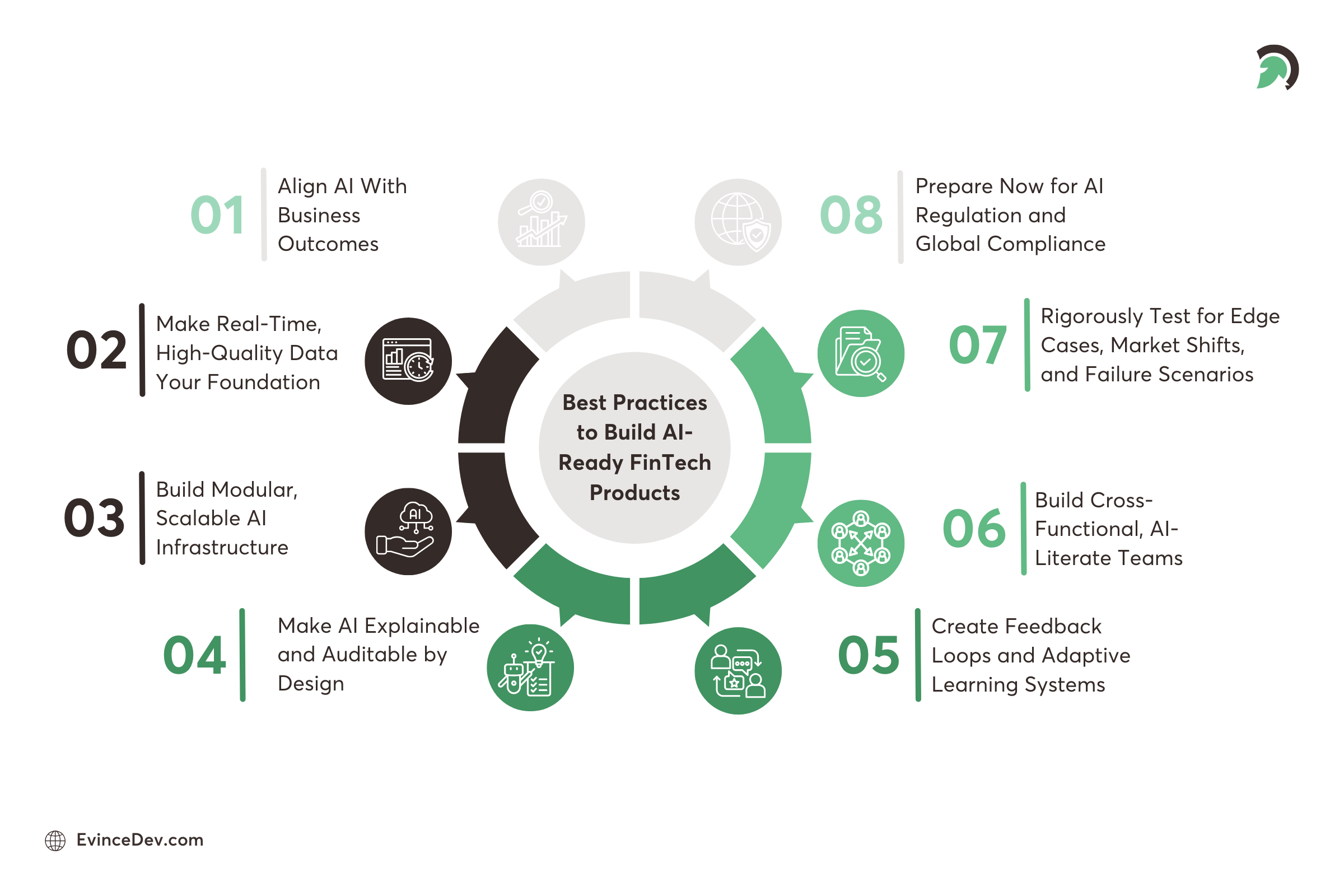

Factors to Consider When Building & Implementing AI-Ready FinTech Products

1. Align AI With Business Outcomes, Not Buzzwords

A critical mistake many organizations make is adopting AI for its novelty instead of addressing a real necessity. The starting point for AI should be a clear business problem with measurable KPIs.

Before building models, ask:

- What core metric are we trying to improve?

- Is the objective to cut fraud losses, speed up loan approvals, boost customer retention, or broaden credit access?

- Can this process be improved with intelligence and automation?

Sample Scenario:

A digital lender aiming to cut loan approval time from 48 hours to under 10 minutes can consider implementing Optical Character Recognition (OCR), Natural Language Processing (NLP), and real-time credit modeling across the onboarding funnel.

Clarity on purpose ensures AI development is tied to ROI, not novelty.

Quick Stat:

A recent industry study from Money20/20 found that 76% of financial services firms have already launched at least one AI initiative, signaling that adoption is no longer optional but essential.

2. Make Real-Time, High-Quality Data Your Foundation

An AI system is only as good as the data it is trained on. “Garbage in, garbage out” is especially true in finance, where outdated, siloed, or biased data can lead to incorrect approvals, compliance violations, or a loss of customer trust.

Key actions:

- Enrich internal CRM and transaction data with external behavioral signals such as device metadata, spending frequency, and repayment history.

- Normalize and unify incoming data streams using middleware tools to reduce mismatches and duplications; vector databases (e.g., Pinecone) can additionally help match near-duplicate records by similarity.

Unfortunately, integration is rarely plug-and-play. Every data source has its own format, update frequency, and naming convention. To overcome this:

- Implement semantic data models that allow for contextual mapping across providers.

- Design resilient pipelines that flag and quarantine anomalies before model training.

- Utilize tools like Airbyte or Apache Kafka to establish scalable ingestion pipelines that span multiple regions and products.
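The mapping and quarantine steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the provider names, field mappings, and validation rules are all hypothetical, and a real system would load them from configuration and run behind an ingestion tool rather than in a loop.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Optional

# Hypothetical per-provider field mappings onto one canonical schema.
FIELD_MAPS = {
    "provider_a": {"cust_id": "customer_id", "amt": "amount", "ccy": "currency"},
    "provider_b": {"customerId": "customer_id", "value": "amount", "currency": "currency"},
}
REQUIRED = ("customer_id", "amount", "currency")


@dataclass
class PipelineResult:
    clean: list = field(default_factory=list)        # records safe for training
    quarantined: list = field(default_factory=list)  # (raw record, reason) pairs


def to_canonical(provider: str, raw: dict[str, Any]) -> dict[str, Any]:
    """Rename provider-specific keys to canonical ones; unmapped keys are dropped."""
    mapping = FIELD_MAPS[provider]
    return {canon: raw[src] for src, canon in mapping.items() if src in raw}


def validate(record: dict[str, Any]) -> Optional[str]:
    """Return a quarantine reason, or None if the record looks usable."""
    missing = [k for k in REQUIRED if record.get(k) in (None, "")]
    if missing:
        return "missing fields: " + ", ".join(missing)
    amount = record["amount"]
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        return "amount is not numeric"
    if amount < 0:
        return "negative amount"
    return None


def run(batch: list[tuple[str, dict[str, Any]]]) -> PipelineResult:
    """Map every record to the canonical schema and split clean from quarantined."""
    result = PipelineResult()
    for provider, raw in batch:
        record = to_canonical(provider, raw)
        reason = validate(record)
        if reason is None:
            result.clean.append(record)
        else:
            result.quarantined.append((raw, reason))
    return result


batch = [
    ("provider_a", {"cust_id": "c1", "amt": 120.5, "ccy": "USD"}),
    ("provider_b", {"customerId": "c2", "value": -5, "currency": "EUR"}),
    ("provider_b", {"customerId": "c3", "currency": "EUR"}),
]
result = run(batch)
```

Keeping the original raw record alongside the quarantine reason makes it easier to fix the upstream source instead of silently dropping data.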

High-quality, real-time data isn’t just an engineering challenge; it’s the lifeblood of responsible, accurate AI systems.

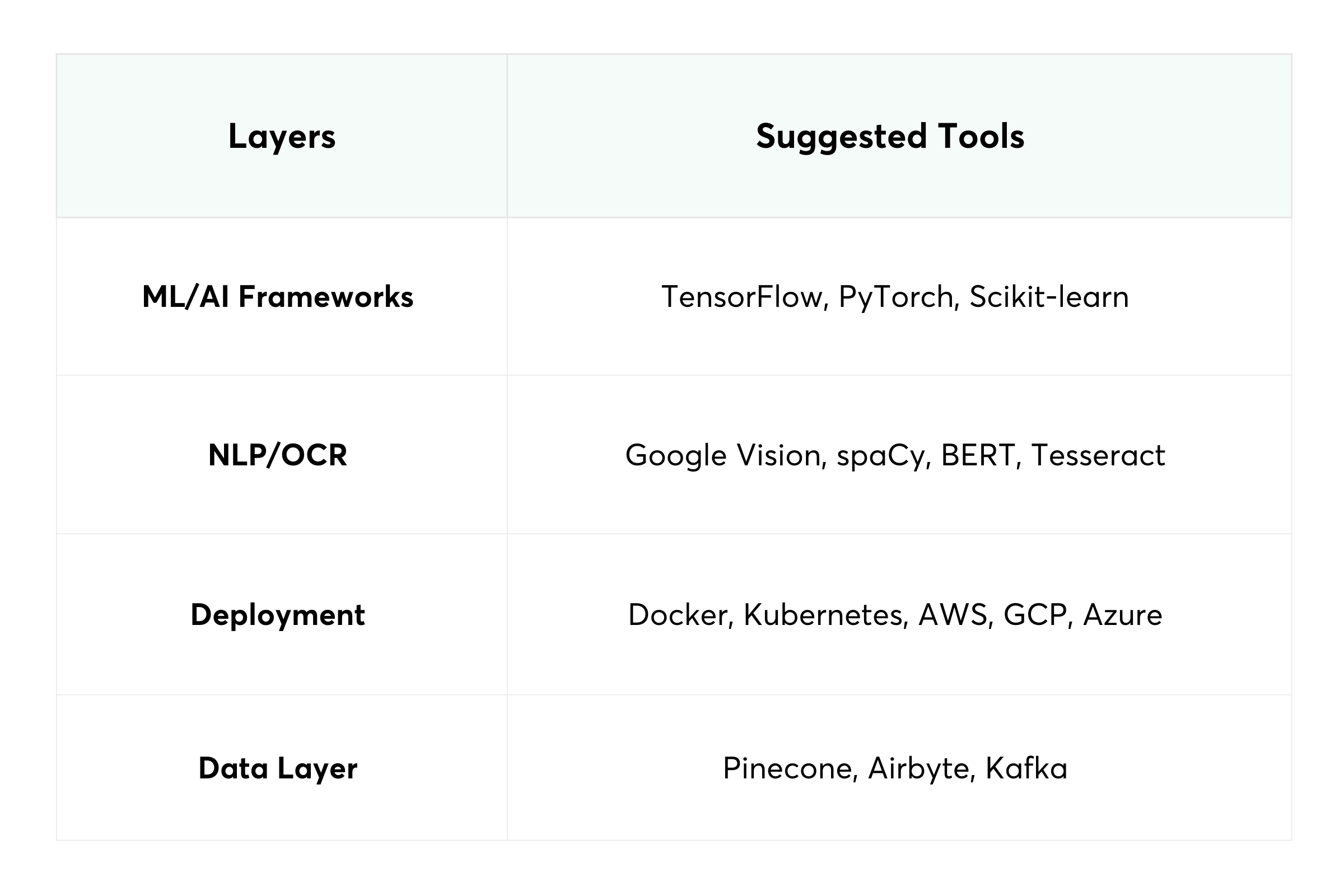

3. Build Modular, Scalable AI Infrastructure

FinTech products must be fast, flexible, and future-ready. A composable AI architecture allows companies to test, deploy, and scale different components independently.

Recommended Technology Stack:

Instead of building monolithic models, start with narrow use cases:

- Automate document verification.

- Flag fraudulent transactions based on device behavior.

- Score credit applicants using behavioral and cash flow indicators.

Once validated, replicate successful models across other verticals such as collections, wealth management, or risk pricing.

Composable AI systems are easier to monitor, audit, and improve without requiring the entire platform to be taken offline.
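One way to picture a composable setup is a small registry of components that share an interface, so each can be replaced without touching the others. The sketch below is illustrative only: the component names, the `device_risk` field, and the thresholds are assumptions, and real components would wrap trained models or external services.

```python
from typing import Protocol


class Component(Protocol):
    """Interface every pluggable component is assumed to follow."""
    name: str

    def run(self, application: dict) -> dict: ...


class DocumentVerifier:
    name = "document_verification"

    def run(self, application: dict) -> dict:
        # Placeholder check: a real verifier would call an OCR/NLP service.
        return {"verified": bool(application.get("id_document"))}


class DeviceFraudCheck:
    name = "device_fraud"

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold

    def run(self, application: dict) -> dict:
        # Flags the application when the (hypothetical) device risk score is high.
        return {"flagged": application.get("device_risk", 0.0) > self.threshold}


class Pipeline:
    """Holds components by name so one can be swapped while the rest stay live."""

    def __init__(self) -> None:
        self._components: dict = {}

    def register(self, component: Component) -> None:
        # Registering under an existing name replaces only that component.
        self._components[component.name] = component

    def evaluate(self, application: dict) -> dict:
        return {name: c.run(application) for name, c in self._components.items()}


pipeline = Pipeline()
pipeline.register(DocumentVerifier())
pipeline.register(DeviceFraudCheck(threshold=0.8))

application = {"id_document": "passport.pdf", "device_risk": 0.7}
before = pipeline.evaluate(application)

# Roll out a stricter fraud check without redeploying document verification.
pipeline.register(DeviceFraudCheck(threshold=0.6))
after = pipeline.evaluate(application)
```

Because each component is addressed by name, a new version can be tested, audited, or rolled back on its own.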

4. Make AI Explainable and Auditable by Design

As AI in the finance industry takes on high-impact decisions, such as approving or denying loans, setting interest rates, or flagging fraud, it must also be able to explain the reasoning behind those decisions.

Regulators now require transparency:

- The EU Artificial Intelligence Act categorizes AI used for credit scoring and creditworthiness assessment of individuals as “high-risk,” demanding traceability, technical documentation, and human oversight.

- The U.S. Consumer Financial Protection Bureau (CFPB) is increasingly scrutinizing AI-driven lending for bias, opacity, and denials issued without clear justification.

To ensure compliance:

- Use explainability frameworks such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) to show which features drove each decision.

- Include natural language summaries that explain decisions to customers.

- Maintain logs of every AI input, output, and the rationale behind each decision for audit purposes.
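To make the idea of per-decision explanations concrete, here is a library-free sketch for the simplest case, a linear score. For a linear model, each feature's contribution relative to a baseline applicant is its weight times its distance from the baseline, which matches the Shapley attribution when features are treated as independent. The weights, baseline, and wording below are hypothetical; a production system would more likely use SHAP or LIME against the real model.

```python
# Hypothetical linear credit score; weights and baseline are illustrative only.
WEIGHTS = {"income_k": 0.5, "debt_ratio": -40.0, "late_payments": -8.0}
BASELINE = {"income_k": 60.0, "debt_ratio": 0.30, "late_payments": 1.0}
BASE_SCORE = 50.0  # score assigned to the baseline applicant

# Customer-facing wording for each feature when it lowers the score.
REASON_TEXT = {
    "income_k": "reported income is below the typical applicant",
    "debt_ratio": "debt-to-income ratio is above the typical applicant",
    "late_payments": "more late payments than the typical applicant",
}


def contributions(applicant: dict) -> dict:
    """Per-feature contribution to the score, relative to the baseline applicant."""
    return {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}


def score(applicant: dict) -> float:
    """Baseline score plus the sum of all feature contributions."""
    return BASE_SCORE + sum(contributions(applicant).values())


def top_adverse_factors(applicant: dict, top_n: int = 2) -> list:
    """Features that lowered the score, most damaging first."""
    contribs = contributions(applicant)
    ranked = sorted(contribs, key=contribs.get)
    return [f for f in ranked if contribs[f] < 0][:top_n]


def explain(applicant: dict, top_n: int = 2) -> str:
    """Plain-language summary suitable for showing to the customer."""
    factors = top_adverse_factors(applicant, top_n)
    if not factors:
        return "No factors lowered this score relative to the baseline."
    return "Main factors lowering the score: " + "; ".join(REASON_TEXT[f] for f in factors) + "."


applicant = {"income_k": 40.0, "debt_ratio": 0.50, "late_payments": 3.0}
summary = explain(applicant)
```

The same contribution dictionary can be written to the audit log next to the inputs and the final decision, so the customer-facing summary and the auditor's record come from one source.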

Trust in AI grows when users and auditors can clearly understand it.

5. Create Feedback Loops and Adaptive Learning Systems

Unlike traditional software, AI systems must learn and evolve. User behavior, market conditions, fraud patterns, and regulations are in constant flux.

To maintain relevance:

- Build automated feedback loops into your AI lifecycle.

- Provide customers with the ability to contest and flag incorrect decisions, ensuring their feedback informs retraining datasets.

- Schedule model retraining every 30 to 90 days, depending on data volatility.

- Include human-in-the-loop checkpoints for edge cases, significant transactions, or disputes that require manual intervention.

More advanced systems also incorporate reinforcement learning, where models self-adjust based on ongoing user interaction and confirmed outcomes.

For visibility, use MLflow, Neptune.ai, or Weights & Biases to monitor:

- Model drift

- Feature importance evolution

- Comparative performance across versions
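Model drift in particular can be approximated with a simple statistic before any tooling is in place. The sketch below computes the Population Stability Index (PSI) between a reference sample of model scores and a recent one. The bin edges and sample data are assumptions chosen for illustration, and the often-quoted cut-offs (below 0.1 stable, above 0.25 significant shift) are a rule of thumb rather than a standard.

```python
import math
from bisect import bisect_right


def bin_shares(values: list, edges: list, eps: float = 1e-6) -> list:
    """Share of values falling in each bin; empty bins are floored at eps."""
    if not values:
        raise ValueError("values must not be empty")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(reference: list, current: list, edges: list) -> float:
    """Population Stability Index: sum of (cur - ref) * ln(cur / ref) over bins."""
    ref = bin_shares(reference, edges)
    cur = bin_shares(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Illustrative data: scores at training time vs. scores seen this month.
edges = [0.2, 0.4, 0.6, 0.8]
reference_scores = [0.1, 0.3, 0.5, 0.7, 0.9] * 20
recent_scores = [0.7] * 50 + [0.9] * 50

stable_psi = psi(reference_scores, reference_scores, edges)
drifted_psi = psi(reference_scores, recent_scores, edges)
needs_review = drifted_psi > 0.25  # rule-of-thumb threshold, an assumption here
```

A value like this can be logged per model version alongside the other metrics, and a breach can trigger retraining ahead of the regular schedule.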

Feedback is not just a support function; it’s the mechanism that keeps AI accurate and ethical.

6. Build Cross-Functional, AI-Literate Teams

Successful AI implementation is never just a data science project; it demands seamless collaboration across technology, business, compliance, design, and legal.

Key team roles:

- Data Scientists to design, train, and validate models.

- Data Engineers to build data pipelines and handle ingestion.

- Product Managers to define problem scope, KPIs, and success metrics.

- Compliance Officers to ensure adherence to legal and ethical standards.

- UX Designers to present AI outcomes clearly and respectfully to users.

- AI Governance Councils to oversee model releases, approve bias testing, and define escalation pathways.

Invest in AI literacy across your organization. Everyone from sales to support should understand the basics of how AI impacts users, risk, and product design.

A cross-functional culture ensures that AI isn’t siloed; it becomes embedded in every decision.

7. Rigorously Test for Edge Cases, Market Shifts, and Failure Scenarios

One of the greatest risks in AI is brittleness. Models that perform well during testing may fail in real-world stress conditions.

Key testing strategies:

- Create sandbox environments where models can be tested safely against simulated economic events (e.g., inflation, recession, fraud spikes).

- Use A/B testing to compare new vs. old models in production.

- Monitor for demographic bias or geographic underperformance.

- Introduce adversarial examples to test model robustness.

- Implement automatic fallback logic if model confidence drops below a threshold.
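The fallback item can be sketched as a thin wrapper around the model call. This is a minimal illustration under stated assumptions: the model is any callable returning an approval probability, and the confidence floor, review amount, and conservative rules are hypothetical values that a real lender would set through policy.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Decision:
    outcome: str  # "approve", "decline", or "manual_review"
    source: str   # "model", "rules", or "human_queue"


def rules_fallback(application: dict) -> Decision:
    """Conservative rules used when the model cannot be trusted for this case."""
    if application["amount"] <= 5_000 and application["debt_ratio"] <= 0.30:
        return Decision("approve", "rules")
    return Decision("manual_review", "rules")


def decide(
    application: dict,
    model: Callable[[dict], float],
    confidence_floor: float = 0.75,
    review_amount: float = 50_000,
) -> Decision:
    """Route a decision to the model, to fallback rules, or to a human reviewer."""
    # Significant transactions always get a human checkpoint.
    if application["amount"] >= review_amount:
        return Decision("manual_review", "human_queue")
    try:
        approve_prob = model(application)
    except Exception:
        # A failing model must not block or silently approve the application.
        return rules_fallback(application)
    confidence = max(approve_prob, 1.0 - approve_prob)
    if confidence < confidence_floor:
        return rules_fallback(application)
    return Decision("approve" if approve_prob >= 0.5 else "decline", "model")


def broken_model(application: dict) -> float:
    raise RuntimeError("model service unavailable")


small_loan = {"amount": 2_000, "debt_ratio": 0.20}
confident = decide(small_loan, lambda a: 0.95)
uncertain = decide({"amount": 20_000, "debt_ratio": 0.20}, lambda a: 0.55)
large = decide({"amount": 80_000, "debt_ratio": 0.10}, lambda a: 0.99)
outage = decide(small_loan, broken_model)
```

Recording the `source` of every decision also gives a simple metric to watch: a rising share of "rules" outcomes suggests the model is losing confidence on live traffic.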

For example, what happens if a borrower moves to another country, changes bank accounts, or experiences a data breach? Your AI should have tested logic for these scenarios.

Treat model testing like software QA with checklists, coverage metrics, and disaster drills.
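One item on such a checklist, monitoring for demographic bias, can start with a comparison of approval rates across groups. The sketch below is a deliberately simple illustration: the group labels and sample decisions are made up, and the 0.8 ratio used as an alert threshold is a commonly cited heuristic assumed here, not a legal test for lending.

```python
from collections import defaultdict


def approval_rates(decisions: list) -> dict:
    """Approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def min_rate_ratio(rates: dict) -> float:
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so there is no gap between groups
    return min(rates.values()) / highest


# Illustrative sample: 8 of 10 approved in group A, 5 of 10 in group B.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
rates = approval_rates(decisions)
ratio = min_rate_ratio(rates)
alert = ratio < 0.8  # heuristic threshold, an assumption for this sketch
```

A low ratio does not prove unfair treatment by itself, but it is a cheap signal for deciding which segments deserve a deeper review.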

8. Prepare Now for AI Regulation and Global Compliance

The regulatory landscape for AI is evolving, and FinTech will be one of the most heavily scrutinized sectors.

Globally, regulators are demanding:

- Complete documentation of AI systems and model use.

- Clear user disclosures during onboarding.

- Opt-out mechanisms for AI-based decisions.

- Dispute resolution pathways.

- Audit logs of input-output pairs and training data lineage.

Examples:

- EU AI Act (2024): Strict risk categories, documentation, human oversight, and penalties of up to €35M or 7% of global annual turnover for the most serious violations.

- CFPB (U.S.): Requires explainability, nondiscrimination, and customer transparency in algorithmic lending, including specific reasons when credit is denied.

- UK FCA and Singapore MAS have issued guidance on “explainable AI” and “ethical AI practices.”

To prepare:

- Draft internal AI policies and risk assessment documents.

- Build a model registry and release audit system.

- Maintain compliance dashboards for all AI modules.
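An audit trail of input-output pairs is easier to defend when tampering is detectable. The sketch below shows one possible approach, an append-only log in which each entry stores a hash of the previous one. The field names and model version strings are hypothetical, and a real deployment would persist entries to durable, access-controlled storage rather than an in-memory list.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def digest(payload: dict) -> str:
    """Stable SHA-256 hash of a JSON-serializable payload."""
    encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


class AuditLog:
    """Append-only decision log where each entry is chained to the previous one."""

    def __init__(self) -> None:
        self.entries = []

    def append(self, model_version: str, inputs: dict, output: str, timestamp: str) -> dict:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else GENESIS_HASH
        body = {
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "timestamp": timestamp,
            "prev_hash": prev_hash,
        }
        entry = {**body, "entry_hash": digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if entry["prev_hash"] != prev_hash or digest(body) != entry["entry_hash"]:
                return False
            prev_hash = entry["entry_hash"]
        return True


log = AuditLog()
log.append("credit-v1.2", {"income_k": 40, "debt_ratio": 0.5}, "decline", "2025-01-15T10:00:00Z")
log.append("credit-v1.2", {"income_k": 85, "debt_ratio": 0.2}, "approve", "2025-01-15T10:05:00Z")
intact = log.verify()

# Simulate someone rewriting a past decision after the fact.
log.entries[0]["output"] = "approve"
tampered_detected = not log.verify()
```

Storing the model version in every entry links each decision back to the registry record for that release, which is the lineage auditors typically ask for.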

Being AI-ready is not just about technology; it’s about defensibility and accountability.

Common Pitfalls to Avoid While Implementing AI Banking Solutions

Many AI initiatives stall or fail because of common, avoidable issues:

- Overfitting to historical data without adapting to future market behavior.

- Lack of explainability resulting in regulatory exposure and loss of user trust.

- Siloed architecture that prevents full integration of insights across functions.

- Lack of governance, leading to model bias, misuse, or legal risk.

- Rushed deployment without testing for edge cases or abnormal behavior.

Avoiding these pitfalls requires not only smarter technology but also a smarter strategy.

Conclusion: AI Is Not a Feature, It’s a FinTech Foundation

Artificial intelligence is no longer an optional add-on for modern FinTechs; it is the core engine driving personalized experiences, risk decisions, and operational efficiency. The winners in the coming decade will be those who treat AI as a company-wide capability, not just a technology.

Getting there demands more than models. It requires:

- End-to-end data pipelines

- Clear governance

- Ethical frameworks

- Adaptive infrastructure

- Transparent interfaces

- And above all, cross-functional, mission-driven teams

When applied thoughtfully, AI in financial services does more than cut costs or save time; it builds trust, broadens access, levels the playing field, and transforms raw data into informed decisions that empower people.

At EvinceDev, we’ve helped global FinTechs across five continents build AI-native systems with explainability, speed, and scale. Whether resolving data silos using semantic search or deploying modular ML models for underwriting, our mission remains the same: help financial platforms win with intelligence, ethics, and agility.