Key Takeaways:

- Visual Discovery: Use image-led search plus visual recommendations so shoppers find relevant products faster than keyword-only search.

- Virtual Reality: VR showrooms let buyers explore products in immersive spaces, improving confidence for high-ticket and complex items.

- Augmented Reality: AR places products in a real environment or on a user, helping validate size, style, and fit before purchase.

- AI & Chatbots: AI chatbots guide discovery by asking about shopper intent, comparing products, and offering personalized picks tied to visual signals.

- 3D Product Views: Interactive 3D models boost product detail page (PDP) engagement by letting users rotate, zoom, and inspect product details before purchase.

- Try-On Confidence: Virtual try-ons cut purchase doubt by showing fit and look in real context, boosting conversions and reducing returns.

- Seamless Checkout: Reduce steps with saved details, smart autofill, and clear delivery info so buyers complete purchases faster with less drop-off.

- Reduced Returns: Better previews plus accurate recommendations reduce wrong-size and wrong-style orders, lowering return rates and costs.

- Deeper Connection: Visual-first journeys feel personal, increasing trust, repeat visits, and loyalty through richer shopping experiences.

The way people shop online has fundamentally changed. Customers no longer browse with patience; they expect immediacy, relevance, and confidence before making a purchase. Yet traditional eCommerce experiences are still largely built around static images, text-heavy descriptions, and keyword-based search systems that fail to reflect how humans actually discover and evaluate products.

Shopping is inherently visual. In physical stores, customers touch fabrics, examine textures, try products on, compare colors under different lighting, and imagine how items fit into their lives. Replicating this experience online has been one of the biggest challenges in digital commerce.

Visual AI is emerging as a powerful solution to this gap. In practice, Visual AI helps brands replicate real-world product evaluation online by turning images into signals for discovery, confidence, and personalization. By enabling machines to understand images and visual context, eCommerce platforms can move beyond flat catalogs and deliver immersive, intuitive, and confidence-driven shopping experiences.

Technologies like virtual try-ons, 3D product models, and image recognition are redefining how customers discover products, evaluate options, and make buying decisions. Visual search is a prominent example: shoppers look for products using images rather than keywords.

This blog explores how Visual AI is transforming eCommerce, breaking down its core pillars, real-world use cases, and the business value it delivers.

Why eCommerce Is Becoming Visual-First

Traditional eCommerce relies heavily on text. Product titles, bullet points, filters, and keyword search have long been the backbone of online shopping. While functional, these tools struggle to support intent-driven discovery. Shoppers often do not know the exact words to describe what they want. They may remember how a product looked, not what it was called.

This creates multiple friction points:

- Keyword search returns irrelevant or overwhelming results

- Customers struggle to visualize fit, scale, and appearance

- Uncertainty leads to cart abandonment or high return rates

- Merchandising teams spend heavily on photoshoots and content updates

Visual AI addresses these issues by shifting the experience from text interpretation to visual understanding. Instead of forcing users to adapt to rigid systems, Visual AI allows platforms to adapt to how people naturally shop.

This shift explains the growing adoption of visual search in eCommerce, which removes the need for precise keywords and lets shoppers find products based on appearance, style, and context.

Quick Stat:

Industry studies report that products featuring 3D or AR content can convert significantly better than those shown with static images alone, with reported increases ranging from 94% to 250%.

Understanding Visual AI in the Context of eCommerce

Visual AI refers to a set of artificial intelligence technologies that enable systems to analyze, interpret, and act on visual data such as images, videos, and live camera inputs. In eCommerce, Visual AI connects product content, customer behavior, and visual perception into a unified experience.

At a high level, Visual AI in eCommerce is built around three core capabilities:

- Virtual Try-Ons that simulate real-world interaction

- 3D Models that replace static product images with interactive visuals

- Image Recognition that powers visual search, tagging, and discovery

Together, these capabilities improve discovery, build trust, and scale product content creation across large catalogs.

Virtual Try-Ons: Bringing the Fitting Room Online

What Virtual Try-Ons Are

Virtual try-ons allow shoppers to see how a product looks on them or in their environment before purchasing. Using a smartphone camera or an uploaded image, AI systems realistically overlay products, adjusting for size, shape, lighting, and movement.

Virtual try-ons are commonly used in:

- Apparel and footwear for size and fit visualization

- Eyewear for face alignment and proportions

- Beauty products like lipstick, foundation, and hair color

- Accessories such as watches and jewelry

- Furniture and home decor for room placement

These experiences reduce the guesswork that often prevents customers from completing a purchase. Many retailers work with AI app development services to integrate camera-based try-ons into mobile apps while meeting performance and privacy requirements.

How Virtual Try-Ons Work

Behind the scenes, virtual try-ons rely on several AI techniques working together:

- Computer vision models detect key landmarks such as facial features, body joints, or room geometry

- Image segmentation separates the user from the background

- Pose estimation tracks movement and angles

- Rendering engines adjust lighting, shadows, and textures for realism

Advanced systems also account for diversity in skin tones, body shapes, and lighting conditions to avoid inaccurate or biased results.

Business Impact of Virtual Try-Ons

Virtual try-ons deliver measurable benefits across the customer journey:

- Higher conversion rates due to increased buyer confidence

- Lower return rates, especially in apparel and beauty categories

- Longer engagement time and stronger brand differentiation

- Better data on customer preferences and fit issues

For retailers, this translates into reduced operational costs and improved customer satisfaction.

Best Practices for Adoption

Successful virtual try-on implementations start small and expand deliberately:

- Start with high-impact categories such as top-selling SKUs or high-return products

- Set realistic expectations by clearly communicating accuracy limitations

- Optimize for mobile-first usage since most try-ons occur on phones

- Track metrics such as try-on usage, conversion lift, and return reduction
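To apply the first practice, some teams rank candidate SKUs by how many returned units they generate, which captures both top sellers and high-return products in one number. The sketch below is a minimal illustration under stated assumptions: the `SkuStats` fields, the 100-unit minimum, and the ranking rule are hypothetical choices, not a standard method.

```python
from dataclasses import dataclass


@dataclass
class SkuStats:
    sku: str             # hypothetical SKU identifier
    units_sold: int      # units sold in the lookback window
    units_returned: int  # units returned in the same window


def return_rate(s: SkuStats) -> float:
    """Share of sold units that came back; 0.0 when nothing was sold."""
    return s.units_returned / s.units_sold if s.units_sold else 0.0


def pilot_candidates(stats: list[SkuStats], top_n: int = 3, min_units: int = 100) -> list[str]:
    """Rank SKUs for a try-on pilot by absolute returned units.

    Returned units reward both volume (top sellers) and return rate
    (high-return products). SKUs below min_units are skipped because their
    return rates are too noisy to trust.
    """
    eligible = [s for s in stats if s.units_sold >= min_units]
    ranked = sorted(
        eligible,
        key=lambda s: (s.units_returned, return_rate(s)),  # tie-break on rate
        reverse=True,
    )
    return [s.sku for s in ranked[:top_n]]


if __name__ == "__main__":
    catalog = [
        SkuStats("DRESS-001", 1200, 360),
        SkuStats("TEE-014", 5000, 250),
        SkuStats("BOOT-007", 800, 240),
        SkuStats("SCARF-003", 40, 20),  # excluded: too few sales
    ]
    print(pilot_candidates(catalog, top_n=2))
```

Ranking by returned units rather than by return rate alone keeps a rarely sold item with a few returns from crowding out a best-seller that generates far more return costs.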

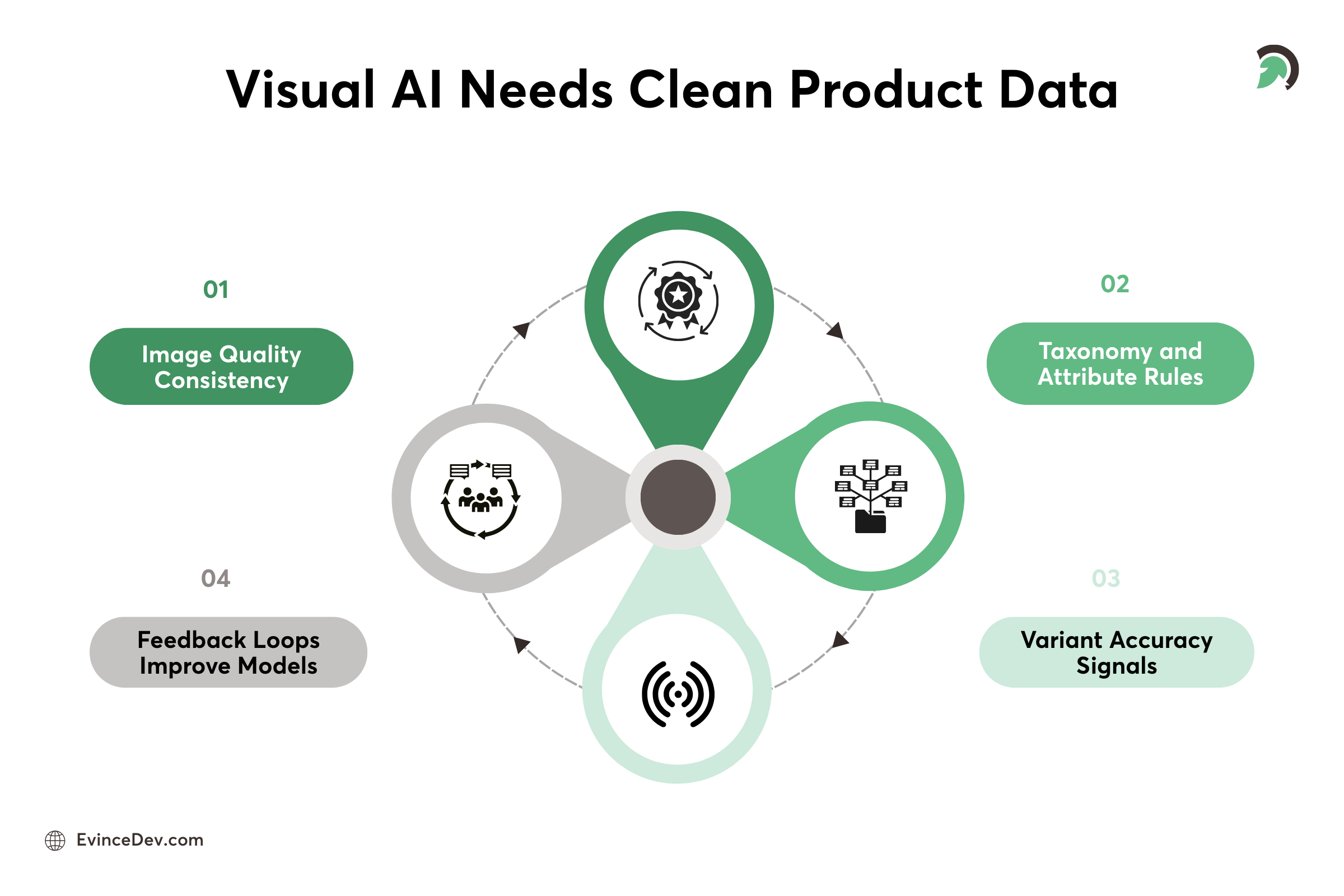

Where Visual AI Can Fall Short Without Strong Product Data

The reality is that Visual AI can significantly improve discovery and purchase confidence, but it is only as good as the product content and data it relies on. Many teams start by focusing on the customer experience, only to find that the toughest challenges are operational: images are imperfect, attribute information is messy, and variant information is missing.

The following foundations make or break Visual AI results:

Image Quality and Consistency

Visual AI models depend on clear, consistent product imagery. If photos vary widely in lighting, angles, backgrounds, or resolution, the system struggles to identify true product features versus noise. Standardized photography guidelines, consistent framing, and high-resolution images improve visual search relevance, auto-tagging accuracy, and recommendation quality.
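A lightweight way to enforce photography guidelines is to check image metadata before assets reach the catalog. This sketch assumes dimensions and an average corner color have already been extracted (for example, with an imaging library); the `ImageMeta` type and every threshold are hypothetical placeholders for your own standards.

```python
from dataclasses import dataclass

# Hypothetical house guidelines; real thresholds come from your own photo standards.
MIN_SIDE_PX = 1600             # shortest side must be at least this many pixels
TARGET_ASPECT = 1.0            # square framing
ASPECT_TOLERANCE = 0.05
MAX_BACKGROUND_DEVIATION = 12  # max per-channel distance from pure white (0-255)


@dataclass
class ImageMeta:
    path: str
    width: int
    height: int
    corner_rgb: tuple[int, int, int]  # average color sampled from the image corners


def guideline_issues(img: ImageMeta) -> list[str]:
    """Return reasons an image breaks the guidelines (empty list if compliant)."""
    issues = []
    if min(img.width, img.height) < MIN_SIDE_PX:
        issues.append("resolution below minimum")
    aspect = img.width / img.height
    if abs(aspect - TARGET_ASPECT) > ASPECT_TOLERANCE:
        issues.append(f"aspect ratio {aspect:.2f} is off-standard")
    if max(255 - c for c in img.corner_rgb) > MAX_BACKGROUND_DEVIATION:
        issues.append("background is not near-white")
    return issues


if __name__ == "__main__":
    good = ImageMeta("DRESS-001_front.jpg", 2000, 2000, (250, 250, 248))
    bad = ImageMeta("DRESS-001_side.jpg", 1200, 1600, (200, 210, 220))
    for img in (good, bad):
        print(img.path, guideline_issues(img) or "OK")
```

Returning readable reasons rather than a pass/fail flag lets photo teams fix images without guessing what went wrong.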

Clean Taxonomy and Attribute Governance

Machines can detect attributes, but they still need a shared vocabulary to assign them to. If attribute names and definitions are ambiguous, the output becomes inconsistent: the same feature may be labeled differently across categories, which confuses filters and search results. Defining an approved lexicon of terms keeps Visual AI output aligned with how the catalog is organized and navigated.
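One way to make a lexicon enforceable is to validate every machine-generated tag against the approved values for its category. In this minimal sketch, the `LEXICON` contents and the `validate_tags` helper are hypothetical; rejected tags would typically go to a taxonomy owner rather than straight into filters.

```python
# Hypothetical lexicon: each category lists the attributes it uses and their approved values.
LEXICON = {
    "dresses": {
        "neckline": {"v-neck", "crew", "square", "halter"},
        "sleeve": {"sleeveless", "short", "long"},
    },
    "sofas": {
        "material": {"leather", "velvet", "linen"},
        "arm_style": {"track", "rolled", "sloped"},
    },
}


def validate_tags(category: str, tags: dict[str, str]) -> tuple[dict[str, str], dict[str, str]]:
    """Split predicted tags into (accepted, rejected) using the category's lexicon."""
    schema = LEXICON.get(category, {})  # unknown categories accept nothing
    accepted, rejected = {}, {}
    for attribute, value in tags.items():
        allowed = schema.get(attribute)
        if allowed is not None and value in allowed:
            accepted[attribute] = value
        else:
            # Either the attribute does not belong to this category or the value is off-lexicon.
            rejected[attribute] = value
    return accepted, rejected


if __name__ == "__main__":
    ok, review = validate_tags("dresses", {"neckline": "v-neck", "sleeve": "3/4", "arm_style": "track"})
    print("accepted:", ok)
    print("needs review:", review)
```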

Variant Accuracy (color, material, and size signals)

Variants are a frequent failure point. If a catalog lists “Navy” in one place and “Midnight Blue” in another, or if material fields are inconsistent, Visual AI can surface the wrong alternatives, mismatched recommendations, or incorrect “similar items.” Aligning variant naming, mapping material types, and ensuring accurate size data improves both try-on realism and discovery precision.
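Variant naming can be aligned with a synonym map that resolves raw labels to canonical values and flags anything unmapped. The mapping below (including treating "Midnight Blue" as `navy`) is a hypothetical merchandising decision, and `group_by_color` is an illustrative helper; the key idea is that unmapped labels are surfaced for review instead of guessed.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical synonym map from supplier or legacy color names to canonical catalog values.
COLOR_SYNONYMS = {
    "navy": "navy",
    "navy blue": "navy",
    "midnight blue": "navy",
    "off white": "ivory",
    "off-white": "ivory",
    "cream": "ivory",
}


def canonical_color(raw: str) -> Optional[str]:
    """Map a raw color label to its canonical value, or None if it is unmapped."""
    key = " ".join(raw.strip().lower().split())  # normalize case and whitespace
    return COLOR_SYNONYMS.get(key)


def group_by_color(variants: list[tuple[str, str]]) -> tuple[dict[str, list[str]], list[tuple[str, str]]]:
    """Group (sku, raw_color) pairs by canonical color; collect unmapped labels for review."""
    groups = defaultdict(list)
    unmapped = []
    for sku, raw in variants:
        color = canonical_color(raw)
        if color is None:
            unmapped.append((sku, raw))  # send to a merchandiser instead of guessing
        else:
            groups[color].append(sku)
    return dict(groups), unmapped


if __name__ == "__main__":
    groups, unmapped = group_by_color([
        ("TEE-014-NV", "Navy"),
        ("TEE-014-MB", "Midnight  Blue"),
        ("TEE-014-TL", "Teal"),
    ])
    print(groups)
    print(unmapped)
```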

Feedback Loops that Keep Models Aligned with Reality

Visual trends change quickly, and catalogs evolve constantly. The best Visual AI systems incorporate continuous feedback loops using signals like search refinements, add-to-cart patterns, return reasons, and customer reviews. This helps models improve over time, correct edge cases, and stay aligned with customer expectations.

The takeaway: before scaling Visual AI across the entire storefront, invest in the content and data foundation. When imagery, taxonomy, variants, and feedback loops are in place, Visual AI performs more accurately, feels more trustworthy, and delivers stronger business impact.

3D Models: Replacing Flat Images with Interactive Product Experiences

Why 3D Product Models Matter

Static product photos offer a limited perspective. Customers can see only what the brand chooses to show. This limitation becomes more problematic for high-consideration purchases where size, structure, and material details matter.

3D models allow shoppers to:

- Rotate products from any angle

- Zoom in on details like stitching or texture

- Understand proportions and scale more accurately

- Interact with configurable options such as colors or components

This level of interaction builds trust and reduces post-purchase regret.

How Brands Create 3D Assets at Scale

Modern 3D pipelines are no longer limited to manual design teams. AI-assisted workflows make large-scale adoption feasible:

- CAD files from manufacturing are repurposed for commerce

- AI automates texture mapping, lighting presets, and background generation

- A single 3D model generates multiple outputs, including images, 360-degree views, AR previews, and videos

This approach dramatically reduces dependency on traditional photoshoots and speeds up time-to-market.

Use Cases for 3D in eCommerce

3D product models support a wide range of applications:

- Configurators that allow customers to customize products

- AR previews that place items in real-world environments

- Virtual showrooms for immersive brand storytelling

- Faster merchandising for seasonal or regional variations

Retailers with complex catalogs benefit the most from this flexibility.

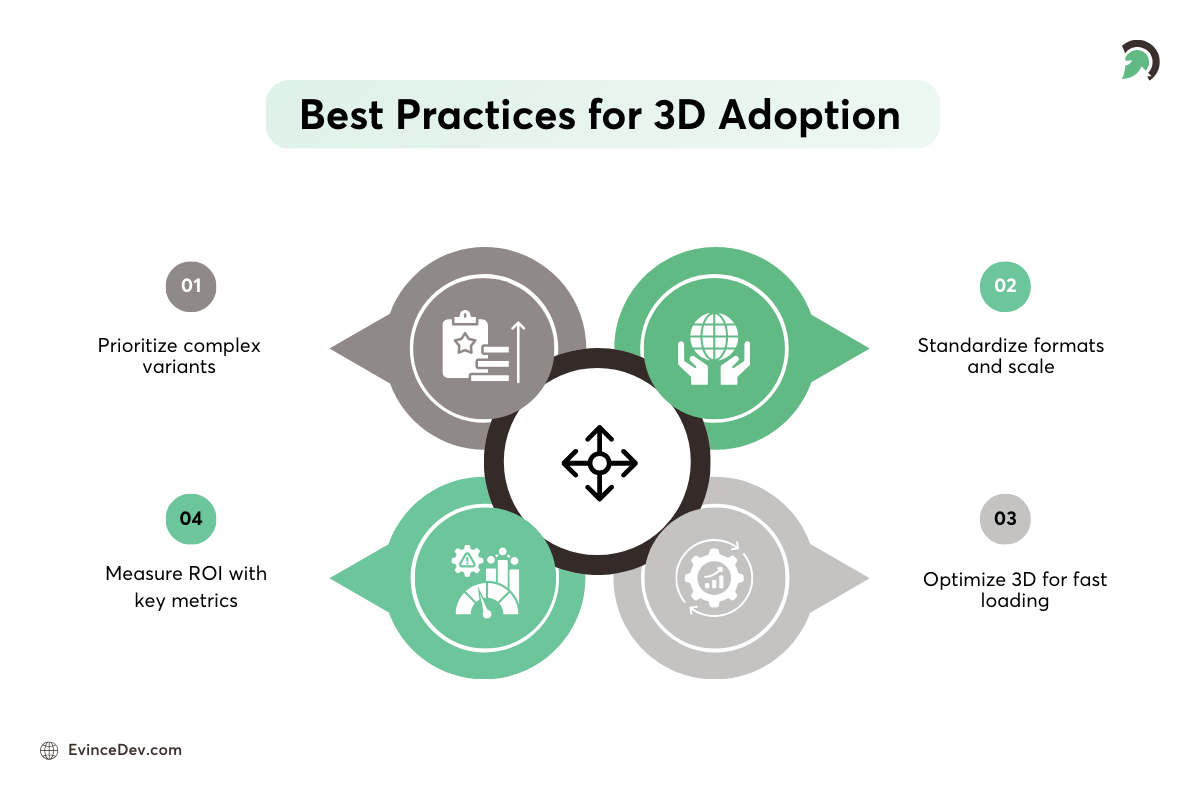

Best Practices for 3D Adoption

To maximize ROI from 3D:

- Prioritize products with multiple variants or high visual complexity

- Standardize file formats, naming conventions, and scale accuracy

- Ensure performance optimization to avoid slow page loads

- Measure impact using conversion rates, content production costs, and engagement metrics
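Standardization and performance rules are easier to keep when each 3D asset is checked automatically on upload. This sketch assumes a hypothetical naming convention (`<SKU>_<variant>.glb`), a hypothetical 5 MB size budget, and a bounding box already read from the model. glTF measures linear distances in meters, so the bounding box can be compared directly with catalog dimensions stored in meters, provided both use the same axis order.

```python
import re
from dataclasses import dataclass

# Hypothetical naming convention: <SKU>_<variant>.glb, e.g. "SOFA-102_velvet-green.glb"
NAME_PATTERN = re.compile(r"^[A-Z0-9]+-\d+_[a-z0-9-]+\.glb$")
MAX_BYTES = 5 * 1024 * 1024  # hypothetical per-model page-weight budget
SCALE_TOLERANCE = 0.02       # allow 2% deviation from catalog dimensions


@dataclass
class Asset3D:
    filename: str
    size_bytes: int
    bbox_m: tuple[float, float, float]          # model bounding box in meters
    catalog_dims_m: tuple[float, float, float]  # same axes, from the product record


def asset_issues(a: Asset3D) -> list[str]:
    """Return reasons an asset fails the naming, size, or scale checks."""
    issues = []
    if not NAME_PATTERN.match(a.filename):
        issues.append("filename does not follow convention")
    if a.size_bytes > MAX_BYTES:
        issues.append("file exceeds size budget")
    for axis, model, spec in zip("xyz", a.bbox_m, a.catalog_dims_m):
        # A large relative gap usually means the model was exported in the wrong unit.
        if spec > 0 and abs(model - spec) / spec > SCALE_TOLERANCE:
            issues.append(f"{axis}-dimension off by more than {SCALE_TOLERANCE:.0%}")
    return issues


if __name__ == "__main__":
    ok = Asset3D("SOFA-102_velvet-green.glb", 3_000_000, (2.10, 0.85, 0.95), (2.10, 0.85, 0.95))
    bad = Asset3D("sofa final v2.glb", 8_000_000, (210.0, 0.85, 0.95), (2.10, 0.85, 0.95))
    for asset in (ok, bad):
        print(asset.filename, asset_issues(asset) or "OK")
```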

Image Recognition in eCommerce: Redefining Product Discovery

Visual Search: Finding Products Through Images

By enabling visual search for eCommerce, retailers let shoppers start with inspiration and quickly narrow to products that match shape, color, pattern, and overall style. Instead of guessing keywords, users search with what they see.

This capability is particularly valuable for:

- Fashion and lifestyle inspiration

- Social media-driven discovery

- Home decor and interior styling

- Trend-based or impulse purchases

Visual search aligns discovery with natural human behavior.
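Under the hood, visual search is commonly implemented as nearest-neighbor retrieval over image embeddings: an encoder model turns each product image and the shopper's photo into a vector, and the closest vectors are returned. The sketch below assumes those embeddings already exist (the encoder is not shown, and the toy vectors are made up) and uses a linear scan with cosine similarity for clarity; large catalogs typically use an approximate nearest-neighbor index instead. The `min_score` cutoff is a hypothetical guard against returning visually unrelated items.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def visual_search(
    query: list[float],
    catalog: dict[str, list[float]],
    k: int = 3,
    min_score: float = 0.5,
) -> list[tuple[str, float]]:
    """Return up to k (sku, score) pairs most similar to the query image embedding."""
    scored = [(sku, cosine_similarity(query, emb)) for sku, emb in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [(sku, score) for sku, score in scored[:k] if score >= min_score]


if __name__ == "__main__":
    # Toy 3-dimensional embeddings; real image encoders produce hundreds of dimensions.
    catalog = {
        "red-dress": [0.9, 0.1, 0.0],
        "red-skirt": [0.8, 0.3, 0.1],
        "blue-sofa": [0.0, 0.2, 0.9],
    }
    shopper_photo = [1.0, 0.1, 0.0]
    for sku, score in visual_search(shopper_photo, catalog):
        print(f"{sku}: {score:.3f}")
```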

Automated Tagging and Catalog Enrichment

Image recognition also plays a critical role behind the scenes. AI models analyze product images to extract attributes such as:

- Color, pattern, and texture

- Shape, style, and material

- Category-specific features like sleeve type or neckline

Automated tagging improves catalog consistency, filter accuracy, and search relevance, reducing manual effort and errors.
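Automated tagging is often paired with a confidence threshold so that only high-certainty tags publish automatically while the rest wait for a reviewer, a common way to add human-in-the-loop validation. In this sketch the thresholds, the `CRITICAL_CATEGORIES` set, and the `TagPrediction` record are hypothetical; the model that produces the scores is not shown.

```python
from dataclasses import dataclass


@dataclass
class TagPrediction:
    attribute: str
    value: str
    confidence: float  # model score in [0, 1]


# Hypothetical thresholds; categories where a wrong tag is costly demand more certainty.
DEFAULT_THRESHOLD = 0.80
CRITICAL_THRESHOLD = 0.95
CRITICAL_CATEGORIES = {"jewelry", "eyewear"}


def route_predictions(
    category: str, preds: list[TagPrediction]
) -> tuple[list[TagPrediction], list[TagPrediction]]:
    """Split predictions into (auto_publish, needs_review) by a category-specific threshold."""
    threshold = CRITICAL_THRESHOLD if category in CRITICAL_CATEGORIES else DEFAULT_THRESHOLD
    auto_publish, needs_review = [], []
    for p in preds:
        (auto_publish if p.confidence >= threshold else needs_review).append(p)
    return auto_publish, needs_review


if __name__ == "__main__":
    preds = [
        TagPrediction("color", "black", 0.97),
        TagPrediction("material", "sterling silver", 0.88),
    ]
    auto, review = route_predictions("jewelry", preds)
    print("auto:", [p.attribute for p in auto], "review:", [p.attribute for p in review])
```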

Visual Recommendations and Personalization

By understanding visual similarity, AI can recommend products based on style rather than just past clicks. This enables:

- “Shop the look” experiences

- Outfit and room completion suggestions

- Discovery of complementary items

Visual recommendations can increase average order value and keep users engaged longer.

Best Practices for Image Recognition

Effective image recognition systems require:

- High-quality product imagery as training data

- A well-defined taxonomy and attribute hierarchy

- Human-in-the-loop validation for critical categories

- Continuous retraining to adapt to trends and catalog changes

Key metrics include search success rates, zero-result searches, and revenue driven by visual discovery.
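The first two metrics can be computed directly from a search log. This minimal sketch assumes a hypothetical `SearchEvent` record that stores the number of results and whether the shopper clicked a product; attributing revenue to visual discovery would require joining order data and is not shown.

```python
from dataclasses import dataclass


@dataclass
class SearchEvent:
    results: int   # number of products returned for the search
    clicked: bool  # did the shopper click a product from the results?


def search_metrics(events: list[SearchEvent]) -> dict[str, float]:
    """Success rate (searches with a product click) and zero-result rate."""
    total = len(events)
    if total == 0:
        return {"success_rate": 0.0, "zero_result_rate": 0.0}
    successes = sum(1 for e in events if e.clicked)
    zero_results = sum(1 for e in events if e.results == 0)
    return {
        "success_rate": successes / total,
        "zero_result_rate": zero_results / total,
    }


if __name__ == "__main__":
    log = [SearchEvent(12, True), SearchEvent(0, False), SearchEvent(5, False), SearchEvent(8, True)]
    print(search_metrics(log))
```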

Implementing Visual AI Without Disrupting Operations

For teams already investing in eCommerce development services, Visual AI features can be integrated incrementally without rebuilding the entire storefront. The right eCommerce development solutions make it easier to connect Visual AI features to your PIM, search stack, analytics, and experimentation tooling.

Phase 1: Quick Wins

- Automated image tagging

- Visual search for a subset of the catalog

- Basic similarity recommendations

Phase 2: Experience Enhancements

- Virtual try-ons for one category

- 3D models for top-performing products

- Interactive configurators

Phase 3: Scaling and Optimization

- End-to-end 3D content pipelines

- Advanced personalization using visual signals

- Expansion across categories and regions

This approach allows teams to prove ROI early and scale confidently.

Data, Privacy, and Ethical Considerations

Visual AI often uses sensitive inputs, especially when it involves cameras, live video, or user-uploaded photos. Because these experiences feel personal, trust and transparency matter as much as accuracy.

Retailers should prioritize:

- Transparent consent and clear disclosures: Make it obvious when the camera is used, what is captured, how it is processed, and whether anything is stored or used to improve models. Offer simple opt-in and opt-out choices.

- Secure storage and minimal retention: Store as little data as possible for the shortest time possible. When storage is needed, use encryption, strict access controls, and clear retention and deletion policies.

- Inclusive model performance: Ensure try-ons and recognition work well across skin tones, body types, face shapes, lighting conditions, and device quality. Regular testing and edge-case reviews help reduce bias.

- Regulatory compliance by design: Build workflows that support user rights (like deletion requests) and meet regional privacy requirements from day one.
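Minimal retention and deletion requests can both be enforced by a scheduled job that selects uploads to purge. The 24-hour window, the `Upload` record, and the `uploads_to_delete` helper below are hypothetical; the actual deletion from storage and any audit logging are left out of this sketch.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical policy: user-uploaded try-on photos are kept for at most 24 hours.
RETENTION = timedelta(hours=24)


@dataclass
class Upload:
    upload_id: str
    user_id: str
    uploaded_at: datetime  # timezone-aware UTC timestamp


def uploads_to_delete(uploads: list[Upload], now: datetime, deletion_requests: set[str]) -> list[str]:
    """IDs of uploads past the retention window or owned by users who asked for deletion."""
    cutoff = now - RETENTION
    return [
        u.upload_id
        for u in uploads
        if u.uploaded_at < cutoff or u.user_id in deletion_requests
    ]


if __name__ == "__main__":
    now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
    uploads = [
        Upload("up-1", "user-a", now - timedelta(hours=30)),  # expired
        Upload("up-2", "user-b", now - timedelta(hours=2)),   # fresh, but user-b asked for deletion
        Upload("up-3", "user-c", now - timedelta(hours=1)),   # fresh, keep
    ]
    print(uploads_to_delete(uploads, now, deletion_requests={"user-b"}))
```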

Measuring ROI: Metrics That Matter

To prove Visual AI is delivering value, measure impact across the full funnel, not just feature usage. The goal is to connect visual experiences to outcomes like revenue, cost savings, and customer confidence, which are the same outcomes decision makers weigh when choosing retail eCommerce solutions that justify investment and scale.

Track these core dimensions:

- Conversion rate and add-to-cart lift: Compare sessions in which shoppers used try-on, 3D view, or visual search vs. those who did not. Also, watch assisted conversions, since visual features often influence decisions even if the purchase happens later.

- Return rate reduction and return reasons: Visual AI should reduce “not as expected” returns, especially for mismatches in fit, color, size, and material. Monitor return reasons to spot where the experience still needs improvement.

- Engagement time and bounce rate: Look at time spent on product pages, interaction depth (rotations, zooms, try-on events), and changes in bounce rate. Higher engagement is useful only if it correlates with better conversion or lower returns.

- Visual search usage and success rate: Track adoption (how many users try it), success (product clicks after a search), and quality signals such as fewer refinements. Rising click-through after image searches and a steady decline in zero-result sessions indicate that discovery is improving.

- Content production time and cost per SKU: For 3D and AI-driven visualization, measure operational ROI: time-to-publish for new products, cost per variant asset, and how quickly teams can refresh content without full photoshoots.
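For the conversion dimension, a comparison of feature users and non-users can be paired with a two-proportion z-test to gauge whether the gap is more than noise. This is a sketch, not a full analysis: shoppers who choose to use try-ons or 3D views may already be more motivated to buy, so a randomized A/B test gives cleaner evidence than this observational split. The function name and inputs are hypothetical.

```python
import math


def conversion_lift(
    conv_with: int, sessions_with: int, conv_without: int, sessions_without: int
) -> dict[str, float]:
    """Compare conversion between sessions that used a visual feature and those that did not.

    Returns both rates, the relative lift, and a two-proportion z-score using
    the pooled conversion rate. |z| above roughly 1.96 suggests the gap is
    unlikely to be noise at the 5% level, assuming independent sessions.
    """
    if sessions_with <= 0 or sessions_without <= 0:
        raise ValueError("both groups need at least one session")
    p_with = conv_with / sessions_with
    p_without = conv_without / sessions_without
    # Relative lift is undefined (NaN) when the baseline never converts.
    lift = (p_with / p_without - 1) if p_without else float("nan")
    pooled = (conv_with + conv_without) / (sessions_with + sessions_without)
    se = math.sqrt(pooled * (1 - pooled) * (1 / sessions_with + 1 / sessions_without))
    z = (p_with - p_without) / se if se else 0.0
    return {"rate_with": p_with, "rate_without": p_without, "relative_lift": lift, "z_score": z}


if __name__ == "__main__":
    # Illustrative numbers only: 60 of 1,000 try-on sessions vs. 40 of 1,000 other sessions converted.
    print(conversion_lift(60, 1000, 40, 1000))
```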

The Future of Visual AI in eCommerce

Visual AI is not a short-term trend; it is becoming a core expectation of modern online shopping. As computer vision models become more accurate and real-time rendering becomes lighter and faster, visual-first commerce will move from a differentiator to the baseline. Shoppers will increasingly expect to search with images, preview products in context, and validate fit or appearance before they buy. Brands that invest early will not just improve their storefront experience; they will redefine what “good discovery” looks like by setting higher standards for relevance, confidence, and speed.

At the same time, content pipelines will shift dramatically. As automation improves, teams will be able to generate consistent product visuals at scale, build 3D assets once and reuse them across the board, and keep catalogs up to date without relying on constant reshoots. This will shorten time-to-market, improve catalog quality, and make personalization more responsive to trends and customer behavior.

The goal is not to replace creativity, but to augment it. Visual AI handles the repeatable, high-volume work like tagging, enrichment, variant generation, and similarity matching, so human teams can focus on what machines cannot: brand storytelling, merchandising strategy, campaign narratives, and customer relationships. In the long run, the winners will be brands that use Visual AI to reduce friction and uncertainty while using human creativity to build trust, differentiation, and emotional connection.

Conclusion

Visual AI is transforming the eCommerce industry by bringing online shopping closer to how people actually perceive products. Virtual try-ons increase certainty. 3D models build trust. Image recognition makes discovery easier. Together, they create experiences that feel natural, immersive, and trustworthy. For brands operating in increasingly competitive marketplaces, with ever-higher customer expectations, Visual AI is an opportunity to stand out by delivering tangible value. The most successful adoptions will be incremental, focused on measurable impact, and scaled deliberately. If you are exploring how to bring Visual AI into your product discovery or content pipeline, an eCommerce development company like EvinceDev can help assess readiness, identify high-impact use cases, and support a practical rollout from pilot to scale.

The future of eCommerce is visual. The question is no longer whether to adopt Visual AI, but how quickly brands can turn visual intelligence into a competitive advantage.